At Mozilla, we know we can’t create a better future alone, that is why each year we will be highlighting the work of 25 digital leaders using technology to amplify voices, effect change, and build new technologies globally through our Rise 25 Awards. These storytellers, innovators, activists, advocates, builders and artists are helping make the internet more diverse, ethical, responsible and inclusive.

This week, we chatted with Julia Janssen, an artist who makes our digital society’s challenges of data and AI tangible through art. We talked with Julia about what sparked her passion for art, her experience in art school, traveling the world, generative AI and more.

I’m always curious to ask artists what initially sparked their interest early in their lives, or, more generally, what sparked their interest in the art that they do. How was that for you?

Janssen: Well, it’s actually quite an odd story. When I was 15 years old and in high school (at 15, you have to choose your courses, or the direction of your classes), I chose higher mathematics and art history, both as substitute classes. And I remember my counselor asked me to his office and said, “Why? Why would you do that? Difficult mathematics and art history have nothing to do with each other.” I just remember saying, “Well, I think both are fun,” but also, for me, art is a way to understand mathematics, and mathematics is a way to make art. I think at an early age, I noticed that both were my interests. So I started graphic design at an art academy. I also did a lot of different projects around our relationship with technology, using a lot of mathematics to create patterns or art, always calculating things. And I could never quite grasp a theme, or the fact that technology was something I was so interested in, but at graduation in 2016, it all clicked together. It all kind of fell into place.
When I started reading a lot of books about data ownership rights and early media theories, I was like, “What the hell is happening? Why aren’t we all constantly concerned about our data? These big corporations are monetizing our attention. We’re basically enslaved by this whole data industry. It’s insane what’s happening. Why is everybody not worried about this?” So, I made my first artwork about this during my graduation. And looking back, I noticed that a lot of my work during art school was already exploring this, for example, things like terms and conditions. I did a Facebook journal where a news anchor in a newsroom read timelines out loud, and all these things. So I think it was already present in my work, but in 2016, it all clicked together. And from there, things happened.

I found that I learned so much more by actually being in the field and doing internships, etc., during college instead of just sitting in the classroom all day. Did you have a similar experience in art school? What’s that like?

Yeah, for sure. I do have some criticisms of how art is taught in schools. I’m not sure if it’s a worldwide thing, but what I experienced is that, from the outside, it looks like a place of freedom: you can express yourself, you can do anything you want. But I also noticed that, for example, privacy and data protection were not typical topics of interest at the time, so most of my teachers didn’t encourage me to stand up for this or to research it, at least not in the way that I was doing it. Or they’d say, “You can be critical toward technology, but saying that privacy is a commodity, that’s not done.” So I felt like, yes, it is a great space, and I learned a lot, but it’s also sometimes a little limited to trending topics. For example, when I was graduating, everybody was working with gender identities and sustainability, those kinds of things.
And everybody was focused on it, and this was kind of the main thing to do. So I feel like there should be more freedom in opening up the field to other interests. It also made me notice that all these kids worry so much about failing a grade, for example. But later on, in the real world, it’s about surviving and getting your money and all these other things that can go wrong in the process. Go experiment and go crazy; the worst that can happen is that you fail a class. It’s not so bad.

There’s a system in place that has been there forever, and so I imagine it must feel draining sometimes, and really difficult to break through, for a lot of young artists.

Yeah, for sure. And I don’t think my teachers were outdated. Most of them were semi-young artists working in the field as well, and teaching for one day. But I think there is also this culture of “this is how we do it, and this is what we think is awesome.” When I taught there for about six months as a substitute teacher, I tried to encourage people and let them know that I can give you advice and be your guide in helping you with whatever you want, and even if I’m not particularly interested in what you want to say in a project, I can help you find a way to make it awesome: how to exhibit it, how to make it bigger or smaller, or how to place it in a room.

I’m curious to know: as an artist, when you see a piece of art, what is the first thing you look for, or what catches your attention and inspires you?

Honestly, it’s the way it is exhibited. I’m always very curious about, and interested in, the ways people display research or use media to express their work.
When I walk through a museum, I sometimes get more enthusiastic about things like the mechanics behind the hanging than about the work that is there to see. That is also where I find inspiration to do things myself, and it’s what I find most thrilling. But also, I’m not an artist who just creates beautiful things, so to say. I need information, data and mathematics to even start creating something. And I highly appreciate people who can just make very beautiful, emotional things that simply exist. I think that’s a completely different type of art.

You’ve also traveled a lot, which is one of the best ways for people to learn and gain new perspectives. How much has traveling the world inspired the work that you create?

I think a good example was last year when I went to Bali, which was actually one of the weirdest experiences of my life, because I hated the place. But it inspired a lot of things for my new project, Mapping the Oblivion, which I also talk about in my Rise25 video. Because what I felt in Bali was that this is such a beautiful island, with such beautiful culture and nature, and it’s completely occupied by tourists and tourist traps and shiny things to make people feel good. It’s hard to explain what I felt there, but, for example, what I like to do on a holiday or a trip is a lot of hiking: just going into nature, walking and exploring, and clearing my mind or not thinking about anything. In Bali, everything is designed to put you at ease or at your service, and that just felt completely out of place for me. But I thought maybe this is something I need to embrace. So, looking on Google Maps, I got a recommendation to go to a three-floor swimming pool. It was awesome, but it was also kind of weird, because I was looking out over the jungle, and I felt like this place had completely ruined this whole beautiful area.
It clicked with what I was researching about platformification and frictionlessness, where platforms, technology or social media timelines try to put you at ease and make decisions effortless. So on music apps, you click on “Jazzy Vibes” and it will keep you satisfied with some jazz vibes. But you won’t really be able to explore new things; it just goes as the algorithm goes. It gives you a feeling of being in control, but it’s actually a highly curated playlist based on their data. What I felt was happening in Bali was that I wanted to do something else, but to make myself more comfortable and find the safer option, I chose that swimming pool instead of exploring. And I did it based on a recommendation from an app. Then I felt constantly in between. I actually wanted to go to more local places, but I had questions: What will I find? Is it safe to eat there? Or should I go to easier places where I’ll probably meet peers and people I can have a conversation or a beer with? But then you go for the easier option, and I felt I was drifting away from doing unexpected things and exploring what is there. I think that’s just a result of the technology being built around us and conforming us with news we might be interested in seeing, music to play, etc. But what makes us human in the end is to feel discomfort, to be in awkward positions, to explore something that is out there and unexpected and weird. I think that’s what makes the world we live in great.

Yeah, that’s such a great point. There are so many people who just don’t naturally and organically explore a city they’re in. They go there and are always looking at recommendations and things like that. But a lot of times, if you just go and get lost in it, you can see a place for what it is, and it’s fun to do that.
It’s also the whole fear of missing out on that one special place. But I feel like you’re missing out on so much by constantly looking at the screen and finding recommendations for what you should like or what should make you happy. And I think this just makes you blind to everything out there, and it also disconnects us from our responsibility for making choices.

What do you think is the biggest challenge that we face this year online, or as a society in the world, and how do we combat it?

I mean, for me, I think most of the debate about generative AI is about it taking our jobs, or the jobs of creatives, writers and lawyers. I think the more fundamental question we have to ask ourselves about generative AI is how we will live with it and still be human. Do we just allow whatever it can do, or do we also take measures to decide what it is desirable for it to replace? Because I feel like if we’re just outsourcing all of our choices to this machine, then what the hell are we doing here? We need to find a proper relationship. I’m really not against technology, not at all. I also see all the benefits, and I love everything it is creating (although not everything). But I think it’s really about creating a healthy relationship with the applications and technology around us, which means that sometimes you have to choose friction, do it yourself and be a person. For example, if you are graduating from university now, I think it will be a challenge for students to actively choose to write their own thesis and not just have it generated by ChatGPT with some clever parameters. I think these small challenges are something we are all currently facing, and fixing them is something we have to want.

What do you think is a simple action everyone can take to make the world online and offline a little better?

Here in Europe, we have the GDPR.
And it says that we have the right of access to our data, which means you can ask a company to show you the data it has collected about you, and it has to show you within 30 days. I also do a lot of teaching on this in workshops, at schools and at universities, showing how to request your own data. You get to know yourself in a different way, which is funny. I did a lot of projects around this, turning it into art installations. I think it’s a very simple act to perform, and it’s interesting to see, because this is only the raw data, so you still don’t know how they use it in profiling algorithms. But it gives you clarity on some advertisements you see that you don’t understand, or helps you understand the scale of what they are collecting about you at every moment. So that is something I highly encourage. Another thing in line with that is “the right to be forgotten,” which is also a European right.

We started Rise25 to celebrate Mozilla’s 25th anniversary. What do you hope people are celebrating in the next 25 years?

That’s a nice question. I hope we will be celebrating an internet that is not governed by big tech corporations but based on public values and ethics. I think one of the smaller steps toward that concerns consent. Currently in Europe, one of the legal grounds for processing your data is informed consent. Informed consent is such a beautiful term, originating from the medical field, where a doctor gives you information about the procedure, the possibilities, the risks and everything. But on the internet, it is just applied as a small button like, “Hey, click here,” to give up all your rights and continue browsing without questioning.
And I think one step is to get a real, proper, fair way of obtaining consent, or maybe even to switch it around, so that data collection is not the default in the infrastructure and instead the rule is “do not collect anything without consent.” We’re currently in a transition phase where there are a lot of very important alternatives to big tech applications. Think about how Firefox is already doing so much better than all these alternatives. But I think, at the core, all our basic default apps should not be driven by commercial, very toxic incentives to just commodify your data. That has to do with how we design this infrastructure, the policies and the legislation, but also the technology itself and the protocol layers of how it works. This is not something we can change overnight. I hope that we’re not only thinking of alternatives to avoid these toxic applications and big corporations, but that not harming your data, your equality, your fairness and your rights becomes the default. In our physical world, we value things like democracy, equality, autonomy and freedom of choice. But on the internet, that is just not present yet, and I think it should be at the core, the foundation, of building a digital world, as it should be in our current world.

What gives you hope about the future of our world?

Things like Rise25, to be honest. I think it was so special. I spoke about this with other winners as well: we’re all just so passionate about what we’re doing. Of course, we draw inspiration from people around us and from other people doing work on this, but there still aren’t many of us, and being united in this way gives a lot of hope.

The post Julia Janssen creates art to be an ambassador for data protection appeared first on The Mozilla Blog.

At Mozilla, we know we can’t create a better future alone, that is why each year we will be highlighting the work of 25 digital leaders using technology to amplify voices, effect change, and build new technologies globally through our Rise 25 Awards. These storytellers, innovators, activists, advocates, builders and artists are helping make the internet more diverse, ethical, responsible and inclusive.

This week, we chatted with Abbie Richards, a former stand-up comedian turned content creator and researcher dominating TikTok, who focuses on understanding how misinformation, conspiracy theories and extremism spread on the platform. She is also a co-founder of EcoTok, an environmental TikTok collective specializing in social media climate solutions. We talked with Abbie about finding emotional connections with audiences, the responsibility of social media platforms and more.

First off, what’s the wildest conspiracy theory you have seen online?

It’s hard to pick the wildest because I don’t know how to even begin to measure that. I tend to find the spirituality ones really interesting, though, and there’s one I think about a lot. There’s the New Earth one, with people who think the earth is going to ascend into a higher dimension. And then there’s the way that links to climate change: when heat waves happen and the temperature is hotter than normal, they’re like, “It’s because the sun’s frequency is increasing, because we’re going to ascend into a higher dimension.” I am kind of obsessed with that line of thought. Also because they think that if your soul vibrates at a high enough frequency (essentially, if your vibes are good enough), you will ascend, and if not, you will stay trapped here on a dystopian, post-ascension earth. That’s wild, because then you’re assigning some random, universal, numerical system for how good you are based on your vibrational frequency. Where is the cutoff?
At what point of vibrating am I officially good enough to ascend, or am I always going to vibrate too low? Are my vibes not good? Do I not bring good enough vibes to go to your paradise? I think about that one a lot.

As someone who wades through tons of misinformation and conspiracy theories all the time, what are the most common things people should notice when they need to identify whether something’s fake?

So I have two answers to this. The first is that the biggest thing people should know when they encounter misinformation and conspiracy theories online is that they need to check in with how a certain piece of information makes them feel. If it’s a piece of information they really, really want to believe, they should be especially skeptical, because that’s the number one thing. It’s not whether they can recognize something like that, or whether AI-generated human ears are janky. It’s the fact that they want to believe what the AI-generated deepfake is saying, and no matter how many tricks we tell them about symmetry and about looking for clues that it is a deepfake, fundamentally, if they want to believe it, the thing will still stick in their brain. They need to learn more about the emotional side of encountering misinformation and conspiracy theories online. I would prioritize that over the tiny little tricks and tips for spotting it, because really, it’s an emotional problem. When people lean into conspiracy theories and fall down a rabbit hole, it’s not because they’re not media literate enough. Fundamentally, it’s because it’s emotionally serving something for them. It’s meeting some sort of emotional, psychological, epistemic need: to feel like they have control, to feel like they have certainty, to feel like they understand things that other people don’t and are in on the knowledge, to feel like they have a sense of community, right?
Conspiracy theories create a sense of community and make people feel like they’re part of a group. There are so many things they provide that no amount of tips and tricks for spotting deepfakes will ever address. And we need to address those. How can we help people feel in control? How can we help them feel empowered so that they don’t fall into this?

The second, to me, is making sure we put the onus on the platforms rather than on people to decipher what is real and not real, because people are going to be consistently bad at that, myself included. We are all quite bad at determining what’s real. I mean, we encounter more information in a day than our brains can even remotely keep up with. It’s really hard for us to decipher which things are true and not true; our brains aren’t built for that. And while media literacy is great, there’s a much deeper emotional literacy that needs to come along with it, as well as a shift of that onus from the consumer onto the platforms.

What are some of the ways these platforms could take more responsibility and combat misinformation?

It’s hard. I’m not working within the platforms, so it’s hard to know what sort of infrastructure they have versus what they could have. It’s easy to look at what they’re doing and say that it’s not enough, because I don’t know about their systems, and it’s hard to make specific recommendations like “here’s what you should be doing to set up a more effective …”. What I can say is that, without a doubt, these mega-corporations worth billions of dollars certainly have the resources to invest in better moderation and to experiment with different approaches: try different things to see what works, and encourage healthier content on your platform. Fundamentally, that’s the big shift. I can yell about content moderation all day, and I will, but the incentives on the platforms are not to create high-quality, accurate information.
The incentives on all of these platforms are entirely driven by profit: how long they can keep you watching and how many ads they can push to you. That means the content that thrives is the most engaging content, which tends to be less accurate. It tends to cater to your negative emotions, to things like outrage, and that sort of low-quality, easy-to-produce, inaccurate, highly emotive content is what is set up to thrive on the platform. This is not a functional system with a couple of flaws; the misinformation crisis we’re in is very much the result of the system functioning exactly as it’s intended.

What do you think is the biggest challenge we face in the world this year, on and offline?

It is going to be the biggest election year in history. We have so many elections all around the world, and platforms that we know don’t serve healthy, functional democracy super well, and I am concerned about that combination this year.

What do you think is one action that everybody can take to make the world, and our online lives, a little bit better?

I mean, log off (laughs). Sometimes log off. Go sit in silence just for a bit. Don’t say anything, don’t hear anything. Just go sit in silence. I swear to God it’ll change your life. I think we are in a state right now where we are chronically consuming so much information, like we are addicted to information and just drinking it up, and I am begging people to take at least an hour a week to not consume anything and just see how that feels. If we could all just step back for a little bit, log off and rebel a little against having our minds commodified so these platforms can sell ads, I really feel like that is one of the easiest things people can do to take care of themselves. The other thing would be to check in with your emotions. I can’t stress this enough.
When you encounter information, how does that information make you feel? How much do you want to believe it? So, very much, my advice is to slow down and feel your feelings.

We started Rise25 to celebrate Mozilla’s 25th anniversary. What do you hope people are celebrating in the next 25 years?

I hope that we’ve created a nice socialist internet utopia where we have platforms people can go to and interact, build community, create culture, and share information and stories in a way that isn’t driven entirely by what’s most profitable. I’d like to be celebrating something where we’ve created the opposite of a clickbait economy, where everybody takes breaks. I hope that’s where we are in 25 years.

What gives you hope about the future of our world?

I interact with so many brilliant people who care so much and are doing such cool work because they care and want to make the world better, and that gives me a lot of hope in general. I think approaching all of these issues through an emotional lens, and understanding that people in general just want to feel safe and secure, want to feel like they know what’s coming around the corner for them, and want to have their peaceful lives, is a much more hopeful way to think about some pretty scary political divides. I think there is genuinely a lot more that we have in common than there are things that divide us. It’s just that right now, those differences feel very loud. There are so many great people doing such good work from so many different perspectives, and combined, we are so smart together. On top of that, people just want to feel safe and secure. And if we can figure out a way to help people feel safe and secure and feel like their needs are being met, we could create a much healthier society collectively.
The post Abbie Richards on the wild world of conspiracy theories and battling misinformation on the internet appeared first on The Mozilla Blog.

Technology and artificial intelligence (AI) are just about everywhere, all the time, and that’s even more the case for the younger generation. We rely on apps, algorithms and chatbots to stay informed and connected. We work, study and entertain ourselves online. We check the news, learn about elections and stay informed during crises through screens. We monitor our health using smart devices and make choices based on the results and recommendations displayed back to us. Very few aspects of our lives evade digitization, and even those that remain analog require intention and mindfulness to stay that way. In this context, with the rise of AI and a growing generation of teens relying on the internet for learning, entertainment and socializing, it’s more important than ever to find ways to involve them in conversations about how technology influences their lives, their communities and the future of the planet.

Tech isn’t all or nothing: it’s something in between

The message adults used early on when discussing technology with teens was “don’t.” Don’t spend too much time on your phone. Don’t play video games. Don’t use social media. This old-school method proved ineffective and, in some cases, counterproductive. More recently, things have changed, and in some cases adults have welcomed technology into their homes with open arms, sometimes without critique or caution. At the same time, schools have put great emphasis on training teens in web development and robotics to prepare them for the ever-changing job market. But before slow-motion running on a beach into a full embrace with technology, pause for a moment to reflect on the power of what we hold in our hands and consider the profound ways in which it has reshaped our world. It’s as if adults jumped between two extreme poles, from utter dystopia to bright and shiny techno-solutionism, skipping the nuances in between.
So, how can adults prepare to foster discussions with teens about technological ethics and the impacts of technology? How can teens be involved and engaged in conversations about their relationship to the technologies they use? We share the future with younger generations, so we need to involve them in these conversations in meaningful ways.

Teens don’t just want to have fun, they want to talk about what matters to them

At Tactical Tech, we’re always coming up with creative, sometimes unconventional ways to talk about technology and its impacts. We make public exhibitions in unexpected places. We create toolkits and guides in surprising formats. But to make interventions specifically for teens, we knew we needed to get them involved. We asked almost 300 international teens what matters to them, what they worry about and what they expect the future to be like. The results gave us goosebumps. Here are just a few quotes:

But not all of their responses were so despairing. They also had dreams about technology being used to help us, such as through education and medical advancements.

We also asked 100 international educators what they needed in order to talk confidently to teens about technology. Educators felt positive about our creative, non-judgmental approach. They encouraged us to make resources that are even more playful, to add more relatable examples and to make sure the points are as concrete and transferable as possible.

Broaching serious topics in fun and creative ways

With all that in mind, we co-created Everywhere, All The Time, a fun-yet-impactful learning experience for teens. Educators can use it to encourage young people to talk about technology, AI and how it affects them. Everywhere, All The Time can be used to create a space where teens can think about their relationship with technology and become inspired to make choices about the digital world they want to live in. This self-learning intervention includes a package with captivating posters and activities about:

The package, created by Tactical Tech’s youth initiative, What the Future Wants, includes provocative posters, engaging activity cards and a detailed guidebook that trains educators to facilitate these nuanced and sometimes delicate conversations. Whether you work in a school, library or community center, or want to hang it up at home, you can use the Everywhere, All The Time posters, activity cards and guidebook to start conversations with teens about the topics they care about. Now is the moment to come together and involve teens in these conversations.

The post Unboxing AI with the next generation appeared first on The Mozilla Blog.

At Mozilla, we know we can’t create a better future alone, that is why each year we will be highlighting the work of 25 digital leaders using technology to amplify voices, effect change, and build new technologies globally through our Rise 25 Awards. These storytellers, innovators, activists, advocates, builders and artists are helping make the internet more diverse, ethical, responsible and inclusive.

This week, we chatted with Finn Lutzow-Holm Myrstad, a true leader in developing better and more ethical digital policies and standards at the Norwegian Consumer Council. We talked with Finn about his work on tech policy, the biggest concerns in 2024 and ways people can take action against those in power.

You started your journey to the work you do now as a little kid going to camps. What did that experience teach you that you appreciate now as an adult?

Since CISV International gathered children from around the world and emphasized team building and friendship, it gave me a more global perspective and made me realize how we need to work together to solve problems that concern us all. Now that the internet is spreading to all corners of the world and occupying a larger part of our lives, we also need to work together to solve the challenges this creates.

There’s a multitude of subjects of concern in the tech policy space, especially considering how rapidly tech is evolving. Which area has you most concerned?

Our freedom to think and act freely is under pressure as growing power and information asymmetries put people at an unprecedented disadvantage, which is reinforced by deceptive design, addictive design and artificial intelligence. This makes all of us vulnerable in certain contexts. Vulnerabilities can be identified and reinforced through increased data collection, in combination with harmful design and surveillance-based advertising. The harm will probably fall hardest on groups who are already vulnerable.
What do you think are easy ways people can speak up and hold companies, politicians, etc. accountable that they might not even be thinking about?

This is not easy, for the reasons outlined above. Having said that, we all need to speak up. Talk to your local politicians and media about your concerns, and try to use alternative tech services when possible (for example, for messaging, browsing and web searches). However, it is important to stress that many of the challenges need to be dealt with at the political and regulatory level.

What do you think is the biggest challenge we face in the world this year, on and offline? How do we combat it?

I see the biggest challenges as interlocked with each other. For example, this year, in 2024, countries home to at least 49 percent of the world’s population are due to hold national elections. Declining trust in democracy and public institutions is a threat to freedom and to solving existential problems such as the climate emergency. Technology can be part of solving these problems. However, the lack of transparency and accountability, in combination with power concentrated in a few tech companies, algorithms that favor enraging content, and a large climate and resource footprint, is currently part of the problem rather than the solution. We need to hold companies to account and ensure that fundamental human rights are respected.

What is one action you think everyone should take to make the world and our lives online a little better?

Pausing to think before you post is generally a good rule. If we all took a bit more care when posting and resharing content online, we could probably contribute to a less polarized discussion and maybe also become less addicted to our phones.

We started Rise25 to celebrate Mozilla’s 25th anniversary. What do you hope people are celebrating in the next 25 years?

That we managed to use the internet for positive change in the world, and that the open internet is still alive.
What gives you hope about the future of our world? That there is increased focus from all generations on the need for collective action. Together, I hope we can solve big challenges like the climate crisis and securing a free and open internet. The post Finn Myrstad reflects on holding tech companies accountable and ensuring that human rights are respected appeared first on The Mozilla Blog.

(To read the complete Mozilla.ai publication featuring all our OSS contributions, please visit the Mozilla.ai blog) Like our parent company, Mozilla.ai has a founding story rooted in open-source principles and community collaboration. Since our start last year, our key focus has been exploring state-of-the-art methods for evaluating and fine-tuning large language models (LLMs). Throughout this process, we’ve been diving into the open-source ecosystem around LLMs. What we’ve found is an electric environment where everyone is building. As Nathan Lambert writes in his post, “It’s 2024, and they just want to learn.” “While everything is on track across multiple communities, that also unlocks the ability for people to tap into excitement and energy that they’ve never experienced in their career (and maybe lives).” The energy in the space, with new model releases every day, is made even more exciting by the promise of open source where, as I’ve observed before, anyone can make a meaningful contribution regardless of credentials, and there are plenty of contributions to be made. If the fundamental question of the web is “Why wasn’t I consulted,” open source in machine learning today offers the answer: “You are, as long as you can productively contribute PRs; come have a seat at the table.” Even though some of us have been active in open-source work for some time, building and contributing to it at a team and company level is a qualitatively different and rewarding feeling. And it’s been especially fun watching our upstream contributions make their way into both the communities and our own projects. At a high level, here’s what we’ve learned about making successful open-source contributions: 1. Start small when you’re new to a project.
If you’re contributing to a new project for the first time, it takes time to understand the project’s norms: how fast they review, who the key people are, their communication preferences, code review style, build systems, and more. It’s like starting a new job entirely from scratch. 2. Be easy to work with. There are specific norms around working in open source, and they closely follow this fantastic post on how to be an effective developer: “As a developer you have two jobs: to write code, and be easy to work with.” In open source, being easy to work with means different things to different people, but I generally see it as: a. Submitting clean PRs with working code that passes tests, or gets as close as possible. No one wants to fix your build. b. Making small code changes on your own, and proposing larger architectural changes to the group for approval before writing them in code, asking “What do you think about this?” Always try to propose a solution as well, instead of just posing more problems to maintainers: they are busy! c. Writing unit tests if you’re adding a significant feature, where significant means anything more than a single line of code. d. Remembering Chesterton’s fence: code is there for a reason, so study it before you suggest removing it. 3. Assume good intent, but make intent explicit. When you’re working with people in writing, asynchronously, potentially in other countries or time zones, it’s extremely easy for context, tone, and intent to get lost in translation. Implicit knowledge becomes rife. Assume people are doing the best they can with what they have, and if you don’t understand something, ask about it first. 4. The AI ecosystem moves quickly. Extremely quickly. New models come out every day and are implemented in downstream modules by tomorrow. Make sure you’re okay with this speed and can match the pace.
Before you open PRs, one thing you can do is follow the repo and its issues, so you get a sense of how quickly things move and get approved. If you’re into fast-moving projects, jump in. Otherwise, pick one that moves at a slower cadence. 5. The LLM ecosystem is currently bifurcated between Hugging Face and OpenAI compatibility. An interesting pattern has emerged in my open-source LLM development work. It’s become clear to me that, in this new space of developer tooling around transformer-style language models at an industrial scale, you are generally building downstream of one of two interfaces: a. Models that are trained and hosted using Hugging Face libraries, and particularly the Hugging Face Hub as infrastructure. b. Models that are available via API endpoints, particularly those hosted by OpenAI. 6. Sunshine is the best disinfectant. As the recent xz issue showed, open code is better code, and issues get fixed more quickly. So don’t be afraid to work in the open. All code has bugs, even yours and mine, and discovering those bugs is a natural process of learning and developing better code rather than a personal failing. We’re looking forward to continuing our contributions, upstreaming them and learning from them as we continue our product development work. The post Open Source in the Age of LLMs appeared first on The Mozilla Blog.
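To make point 5 of the Mozilla.ai post above more concrete, here is a minimal, hypothetical Python sketch of the two interface styles it describes. It is not Mozilla.ai’s code and does not call any real library: `ChatBackend`, `LocalModelBackend`, `EndpointBackend` and `messages_to_prompt` are illustrative names, the prompt format is a naive placeholder, and the local model call and the HTTP transport are injected as plain functions so the sketch runs offline. The only detail borrowed from a real API is the OpenAI chat-completions shape: a request body with `model` and a list of `messages`, and the reply under `choices[0].message.content`.

```python
from dataclasses import dataclass
from typing import Callable, Protocol

# An OpenAI-style chat message, e.g. {"role": "user", "content": "Hello"}
Message = dict[str, str]


class ChatBackend(Protocol):
    """Hypothetical common interface that downstream tooling codes against."""

    def complete(self, messages: list[Message]) -> str: ...


def messages_to_prompt(messages: list[Message]) -> str:
    """Flatten chat messages into one text prompt.

    Naive placeholder format (an assumption); chat models on the Hugging Face
    Hub usually ship their own template, applied via the tokenizer.
    """
    lines = [f"{m['role']}: {m['content']}" for m in messages]
    lines.append("assistant:")
    return "\n".join(lines)


@dataclass
class LocalModelBackend:
    """Style (a): a locally loaded model that maps a text prompt to text.

    `generate` is injected so the sketch runs without downloading weights.
    """

    generate: Callable[[str], str]

    def complete(self, messages: list[Message]) -> str:
        return self.generate(messages_to_prompt(messages))


@dataclass
class EndpointBackend:
    """Style (b): an OpenAI-compatible chat-completions endpoint.

    `post` stands in for an HTTP POST of a JSON body that returns parsed JSON.
    """

    post: Callable[[dict], dict]
    model: str

    def complete(self, messages: list[Message]) -> str:
        body = {"model": self.model, "messages": messages}
        response = self.post(body)
        # Chat-completions responses carry the reply at choices[0].message.content
        return response["choices"][0]["message"]["content"]


if __name__ == "__main__":
    chat = [{"role": "user", "content": "Hello"}]
    backends: list[ChatBackend] = [
        LocalModelBackend(generate=lambda prompt: f"[local] {len(prompt)} chars"),
        EndpointBackend(
            post=lambda body: {"choices": [{"message": {"content": f"[{body['model']}] ok"}}]},
            model="example-model",
        ),
    ]
    for backend in backends:
        print(backend.complete(chat))
```

Putting both styles behind one small interface is one way a downstream tool could stay agnostic about which side of the split a model lives on; in practice, the local side would swap the naive prompt format for the model’s own chat template.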

At Mozilla, we know we can’t create a better future alone; that is why each year we will be highlighting the work of 25 digital leaders using technology to amplify voices, effect change, and build new technologies globally through our Rise 25 Awards. These storytellers, innovators, activists, advocates, builders and artists are helping make the internet more diverse, ethical, responsible and inclusive. This week, we chatted with Marek Tuszynski, an artist and curator who is the Executive Director and co-founder of Tactical Tech, an organization dedicated to supporting initiatives that promote better privacy and digital rights. We talked with Marek about how his travels abroad shaped his career, what sparks his inspiration and the challenges we face online. The first question I wanted to ask is very straightforward: what initially inspired you to co-found Tactical Tech? What was the first thing that made you really want to start the work that you do? Marek Tuszynski: Things were not being looked at in the ways I thought were important, especially technical things, and this goes back over 20 years now. But the truth is, it was actually more excitement. Excitement that there was such a massive opportunity, such a massive chance for many different people (actors, places, nations) to do things differently and outside of the constraints they were in at the time, and so on. I was working internationally at the time, in sub-Saharan Africa and Southeast Asia, and was thinking that a lot of tech was being dumped in these places. I’m also from Eastern Europe, so we never saw the leading edge of technology; we saw the tail end, if we were lucky and if the censors allowed it.
So I wanted to use technology not only as an opportunity I had been given by chance (I just happened to be in the right place at the right time), but also to bring it to others, to co-develop it and do things together with people. My early focus was on open-source software, and that was the driving force behind how we think about which technology is better for society, which is more right, which gives you more freedoms rather than restricting them, and so on. The story, as we know, has turned kind of dark, but the inspiration was a fascination with the possibilities of technology as a tool for accessing knowledge and information, a tool for refining the way we see the world. It’s still there, it was there at the very beginning, and I think that’s a major force. You mentioned some of the traveling that you did. I’m very much of the belief that traveling is one of the best ways for us to learn. How much did the traveling, and the places you’ve been to and seen, influence the work that you did and give you a bigger perspective on some of the things you wanted to do? That’s interesting, because I come from an art background, and one of my fascinations is a period in our history when makers spent part of their time traveling while they were learning. They would go and visit other artists, studios, vendors and makers to see how they did things, because there was no other way for information to travel, and for me that was very important. But I think you are right. It is not cool to talk about traveling these days, because travel also means burning fossil fuels and all that kind of stuff, which is unfortunately true, but you can travel in other ways; I’m doing a lot of sailing these days. As for that initial traveling: coming from an entirely restricted part of the world, growing up I actually thought, “I’m never going to travel.”
This was something I would read about in books and see in films, but never experience, because I couldn’t get a passport. I didn’t have the basic right to cross the border I was confined by. So the moment I could, I did, and I practically never came back. I left the place I’m from 35 years ago. The question you’re asking is very important, because travel teaches you a lot of things, and you become much more humble. Your eyes and brain and heart open up much more. And you see that the world is unique, that people around you are unique (you’re not that unique yourself), and there’s a lot to learn from that. Initially, when you travel, you have this arrogance (I had this arrogance, at least) that you’re going to go places, learn them, understand them and turn that into something. And then you go, and you learn, and you never stop. Learning is endless, and that’s the most fascinating part. But I think the biggest privilege was to meet people on their own ground, with their own ideas about everything. And then you confront yourself and rip apart the way you frame things, because you come from a place with ideas that somebody else put into your education system. So for me, yeah, traveling worked in a way. And it’s not necessarily physical travel that we need. Now you can do it virtually, like we are having this conversation: you get to know people, and you travel in some way. You may not see the actual architecture of the place they are in, but you see, “Okay, this is a person coming from somewhere with a certain set of ideas, questions, and so on.” You look at them and think about how we can have a conversation knowing that we come from very different places. Travel gives you that. When it comes to the ideation process, what sparks the inspiration for the experiences you try to create? Is there any research or data that you look at?
Are there trends that you look at to get inspired? What starts that process? There’s a mixture. I think we’re probably the least analytical organization. If something is already a trend, or mainstream on social media or in other media, it’s too late. For me personally, it’s always about observing what’s being talked about. With AI, it’s which aspects of AI are being totally omitted. There’s a lot of focus now, for example, on elections and the visible impact of AI: how it can amplify disinformation and confusion and basically erode trust in what we see, what we hear and what we read. And it’s super important. People are going to learn that very quickly. But I think what is happening is the invisible part, where businesses interested in influencing political or non-political opinions on issues that are critical for people will be using AI to analyze data. For hyper-fast profiling of people, and much cleverer ways of addressing them with cleverer advertising, which won’t necessarily be paid; it doesn’t have to be. Or even how certain strategies are designed, which can be augmented by AI. And I think this is where I will be focusing, rather than on deepfakes, which we’ve covered already. But to the core of your question about how we choose the topics we focus on: from the first day of Tactical Tech, we’ve always based our work on partners, collaborators and the people we work with, often a group of people or an institution that has invited us to work with them. And for me, good listening is part of the critical research. So instead of coming in with our own views and research questions, we listen to the questions that are already there: where the curiosity is, where the fears are, where the hopes are, and so on. Then we sometimes build backwards from there and think about what we can bring from the position we occupy.
Is there one collaboration or organization you’ve worked with that you felt was really impactful, that you’re most proud of? There are hundreds of those (laughs). We had this group of people from Tajikistan (many people don’t know what Tajikistan is), and they were amazing. They were basically like sponges, soaking everything in and engaging with the cultural crossover between us, them, everybody else and people from other countries; it was massive. But everybody was positive and trying to figure out some kind of common language, a bizarre English mixed with other languages. And that first encounter turned into a friendship and collaboration that led them to become the key people who brought open-source tools into the school system in Tajikistan. That was one of those examples that was very positive and encouraging. We do a lot of collaboration, and we have hundreds of partners now, especially in our educational work. I think the most successful series was with a number of groups in Brazil that we collaborated with, because they really took the content and the collaboration to the next level and took ownership of it. For me, the ideal scenario for the work we do is that we may be developing something on the stage together, but there should be a moment when we disappear from the stage and somebody else takes it. And that’s what happened. And that’s just beautiful to see. And when somebody tells you about this amazing product, and they don’t even know that you were part of it, that is the best compliment you can get. What do you think is the biggest challenge we face in the world this year on and offline? How do we combat it? I think people have different challenges in different places.
The first thing we are going to launch is a series of election influence situation rooms: creative spaces with a front of house and a back of house that we would like to mount around some of the elections that are happening, like the U.S. or European Parliament elections, among many others. What we would like to showcase, and kind of demystify, is not only the way we elect people, but how the entire system works, or doesn’t. What we’re trying to do with the project “the situation rooms” is to unpack it and show how important confusion is for a lot of entities. How important polarization is, how important a lack of trust in specific forms of communication is, or how institutions fail, because in this environment it is much easier to put forward everything from conspiracy theories to fake information. But it also makes people frustrated. It makes people much more black-and-white, aligning themselves with things they would not have aligned with before. I think this is the major thing we are focusing on this year: this set of elections, and how we can get people engaged without paranoia or any kind of dystopian way of thinking about it. In a way, it helps us build an apparatus for recognizing the situation we are in. And then we can build some methods for what we need to know: to understand what is happening, how technology can be useful for democratic processes and elections, but also how it can be extremely harmful. What do you think is one action that everyone should take to make the world and our lives online a little bit better? Technology is the bridge. Technologies open channels of communication. Use technology for that. Don’t focus on yourself so much; focus on the world and other people.
And if technology can help you understand where they’re coming from, what their needs are and what kind of role you can play from whatever level of privilege you may have, use it. The fact that you may use better technologies, and have access to them already, is a certain kind of privilege that I think we should share widely. Use technology to engage other people who don’t want to engage, who have lost trust. Don’t give up on them. Don’t give up on people on the other side, those voting for people you have no respect for. They are lost. And part of the reason they are lost is the technology they’re using: how it is channeling information and making them believe in things they shouldn’t be believing in, and probably wouldn’t if that technology hadn’t happened. I think we are past this kind of libertarian idea that using technology for individual good is going to turn everything into a better world. It has proven itself wrong many times by now. We started Rise25 to celebrate Mozilla’s 25th anniversary. What do you hope people are celebrating in the next 25 years? I think Rise 25 was fantastic because it was nice to see all kinds of people: 25 people who are so different, thinking about tech and ideas in such different ways, and diverse in many ways that you don’t see very often. And it gave people the space to actually vocalize what they think. That was definitely unique. I think Mozilla plays a specific role in this kind of in-between sector: it’s a corporation in San Francisco that builds tools, but it’s also a foundation. Mozilla has unique access to people around the world who do a lot of creative work around technology. And I think that should be celebrated. I don’t think there’s enough of that.
Usually people are celebrated for what they achieve with technology, which usually means making a lot of money; there’s very little talk about technology that has a positive impact on society and the world. And I think Mozilla should be highlighting and showcasing that, and I think there are plenty of things that can be celebrated. What gives you hope about the future of our world? You know, it’s going to be okay. Don’t worry. But we are going to see people who will be happy, and there will be people who separate. I think the more we can do now for the generations of people coming up now, the better. And if you’re lucky enough to live long, you may feel proud of what you’ve been doing. Focus on that, rather than imagining or picturing some future machine. The post Marek Tuszynski reflects on curating thought-provoking experiences at the intersection of technology and activism appeared first on The Mozilla Blog.

At Mozilla, we know we can’t create a better future alone; that is why each year we will be highlighting the work of 25 digital leaders using technology to amplify voices, effect change, and build new technologies globally through our Rise 25 Awards. These storytellers, innovators, activists, advocates, builders and artists are helping make the internet more diverse, ethical, responsible and inclusive. This week, we chatted with creator Rachel Hislop, a true storyteller at heart who is currently the VP of content and editor-in-chief at OkayAfrica. We talked with Rachel about the ways the internet allows us to tell our own stories, working for Beyonce and what’s to come in the next chapter of her career. The first question I have is: what was your favorite Beyonce project to work on? I want to know. Also, what’s your favorite Beyonce album? I’ll say that my favorite project to work on was definitely Lemonade. It was just so different from anything that had ever existed. It was so culturally relevant. It was well-timed. It was honest and just really beautiful. You can sometimes be jaded by the work when you’re too close to it, and I think that’s true across the board in any industry, but there was never a moment working on that project when I didn’t understand and appreciate just how beautiful everything was. It was really just like, all of you people that I see at work every day, this came out of your minds? And then, it was just a lot of love, and it was a really important time in culture, I think. And I really enjoyed being part of that. My favorite Beyonce album … I don’t know that I can answer that, because every one has had a very important impact on my life. I had The Writing’s on the Wall on a cassette tape that I used to play in my little boombox. With Dangerously in Love, I was in high school and experiencing little crushes for the first time. The albums grew with me.
I met Sasha Fierce when I was in college, learning the dualities of self while away from home, and so on and so forth. So, it’s hard to pick a favorite when each of those moments was so tied to different portions of my life. I’m curious to know what types of stories pull you in and influence the work that you do as a writer. It’s living, honestly. I’m a really curious person, and I think all the best writers are. It’s even weird calling myself a writer sometimes, because so much of what I write right now is just for me, and for projects that I really believe in. It’s really just the curiosity. I want to know how everything went. How did you get here? What inspired you? I’m good for going out alone, sitting next to a stranger and then learning their whole life story, because I am just truly interested in people, and there is no parallel to lived life. I also love reading fiction. I am not the girl that’s like, “yeah, self-help books and self-improvement” and things like that. I want to fully escape into a story. I want to be able to turn my brain on and imagine things and fully detach and escape from this world. And then I love reading old magazine articles from when people were allowed to have long, luxurious deadlines and follow subjects for a really long time. I remember an article they would make us read in journalism school, “Frank Sinatra Has a Cold,” and I love that voyeuristic journalism where, if you can’t get to the subject, you talk about the things around them. Everything is so interesting to me. I also love TikTok. I learn so much. I think it’s a really valuable form of storytelling. Short-form is really hard, harder than people give a lot of these creators credit for. My grand hope is that those small insights in short videos are piquing curiosity and sending people down rabbit holes to go discover and read and go deeper into the internet.
I remember back in college there was this internet plugin called StumbleUpon, and it would roulette the internet, landing on random pages where you’d learn things. That is how my brain is always processing. I’ll see something that interests me and then be like, “I want to know more about that.” You’ve been in the content media space for a while. What do you think is the biggest misconception people have about working in this space? You know that saying that if you do what you love every day, you’ll never work a day in your life? I think it’s that. There is a pressure that you feel when you are following your actual passion. This is not just my job, this is what I like to do in my free time, this is what I think is important. This is what I feel like I was born to do, right? To storytell. And every day, it feels like work. And it feels even harder than work, because it feels like a calling. From the outside, I’m sure people are like, “Oh, you get to do this cool stuff. You get to talk to all these interesting people. You get to talk about the things that you care about.” But there is truly a pressure to document this stuff in a way that pays homage to everyone and everything that came before it and solidifies its place in the history to come, and to handle things with delicacy and care and importance. There are just so many layers to it. So, it’s never just about the one thing that you love. It’s about the responsibility that you now have to this thing because you love it. And when you’re working in culture spaces specifically, there is always someone you have to give their flowers to for you to be able to do the work you’re doing, but you also have to be forward-looking. You have to look forward and innovate while you are also honoring what has come before. And there can be a misconception that it’s easy, or “I can do that.” We see a lot of that with interviewers I love, where people say, “Shannon Sharpe didn’t ask the right questions.
Insert podcaster here who got a really great hit and didn’t ask the right questions.” It’s because we’ve lost the art: because people see the end product, they believe it is easy. And they forget that everything journalists do is in service to the audience and not in service to themselves. And we’re seeing this really weird landscape now where everyone is in service to themselves, to their own popularity and to growing their own audiences, but then they don’t serve those audiences with ethics. That, for me, is the misconception: that it’s just so easy, anyone could do it. Now everyone is doing it, and they’re wondering where the value is, where the premium stories are and all of these things. Who are the Black women you’re inspired by, and the people you go to when you’re faced with so many of the challenges Black people face in the content and media industry? I am very intentional about friendships, and I treat my friends like extensions of myself. They’re the board of directors for my life, and these are often friends from college. I think people really discount the friends that you make in your first big-girl job, when you’re learning everything together. Those have become the people I call on throughout my career, who I call on to collaborate on projects, who I call on when things are falling apart. I’ve been just so blessed to have those people in my life as my board of directors for all things. And I serve as the same for them. I am going to tell you the truth: I don’t know that I’ve met a Black woman that I’m not inspired by. I truly am just so in awe of so many women. After the pandemic, I made a practice of being like, “I don’t want these people who I like online to just be my online friends.” I wanted to meet these people in real life. I started just DM’ing people and being like, “Hey, we’ve been on here a while. Can we grab lunch?” And then just continuing that connection in person has been so, so fantastic.
I do also want to shout out the Poynter Institute. I did a training with them in 2019, right before the pandemic, for women in leadership positions in newsrooms. When I tell you it was a week-long intensive, seven days in a classroom … it was like therapy for work in a way that I didn’t know I needed, and didn’t know was available to any of us. We built such strong bonds, even though some people worked at competing publications. When we put all of that aside, it was just women of all ages and all backgrounds who were working in an industry, who really, really cared about the work they were doing and wanted to be their best. And through that group of people, I have made true, lifelong friends. When things were falling apart in 2020, I was calling on my cohort members and building deep, deep connections from there. What do you think is the biggest challenge we face in the world this year on and offline? How do we combat it? There’s so much happening in the world, but I think the one thing I can say continues to be dangerous is misinformation online. I’m going to speak specifically about Palestine. We see the power of storytelling from the front lines in a way that we have never witnessed before with any conflict, right? And we’ve seen that unfold into horrors that we would never know were happening if we did not see it coming from the front lines. I just give kudos to the journalists who are on the ground, and the people who have become journalists by force, who have documented for us situations that we couldn’t even fathom. Even if we tried, we couldn’t fathom what it’s like to work through that. And while that is really helpful in illuminating the evils of the world, on the other side there’s so much misinformation, because everyone is rushing to discredit what we’re seeing with our own eyes, and the framing that used to be able to take place is not available anymore.
And I’m not going to speak to that being a political tool or otherwise, but the framing is not as readily available. I think we saw this with our elections in America four and eight years ago. Now we’re in an election year again, and as technology grows and changes faster than we are learning how to master it, I really do believe that misinformation is going to be one of the hardest things to combat. I really don’t know how to combat it. Things are just changing so quickly, and there’s just so much access to so much. I think the answer, as with most things, is community and people coming together to dream together. There’s not going to be a single person who solves for all of this. It’s going to take collective efforts to make sure that we’re doing our best. What is one action that you think everyone should take to make the world and our lives online a little better? I think everyone can be a little bit more curious. We don’t need to trust things at face value the first time. We need to be more curious about the sources of the information that we are taking in. And that doesn’t mean we have to be constantly engaging in combat with it. You can take things at face value and then do more research to inform yourself about all sides of a story, and I think that’s one action we can take in our day-to-day lives to just be better. We started Rise25 to celebrate Mozilla’s 25th anniversary. What do you hope people are celebrating in the next 25 years? I hope we get those flying cars that we were promised (laughs). But truly, I hope that in 25 years, we are celebrating the earth more. I really do hope that we’re celebrating the earth still housing us, that we’re all being a little kinder and more thoughtful in the ways we engage with nature and ourselves, and that people are spending a little more time reconnecting with who they are offline.
What gives you hope about the future of our world? I mentor with the Lower East Side Girls Club, which is a nonprofit here in New York, and we do a mentee outing once a month. The girls range from middle school through high school, and they are so bright and so well-rounded and smart, but they’re also funny, and they have great social cues, and they think so deeply about the world, and they’re really compassionate. The things that used to trip me and my peers up as middle schoolers don’t trip them up; they’re so evolved past that. Every time I think that mentoring means me teaching, I realize that it really means me learning, and when I leave those girls, I’m like, “Alright, we’re going to be okay.” They give me some hope. The post Rachel Hislop reflects on working for Beyonce, creating community for Black women and the power of storytelling appeared first on The Mozilla Blog.

Your tech choices matter more than ever. That’s why at Firefox, we believe in empowering users to make informed decisions that align with their values. In that spirit, we’re excited to announce our partnership with Qwant, a search engine that prioritizes user privacy and tracker blocking. Did you know you can choose your preferred search engine right from the Firefox URL bar? Whether you prioritize privacy, climate protection, or simply want a search experience tailored to your preferences, we’ve got you covered. Qwant is a privacy-focused search engine that puts your needs first while protecting your personal data. By blocking trackers and advertisements, Qwant helps your search results remain unbiased and comprehensive. Just like Firefox, Qwant is committed to protecting your privacy and preserving the decentralized nature of the web, where people have control over their online experiences. Together, Firefox and Qwant are contributing to a more open, inclusive web and, above all, one where you can make an informed choice about what tech you use, and why. Your tech choices make a difference. As Firefox continues to champion user empowerment and innovation, we invite you to join us in shaping a web that works for everyone. Together, let’s make a positive impact, one search at a time. The post Empowering Choice: Firefox Partners with Qwant for a Better Web appeared first on The Mozilla Blog.

(To read the complete Mozilla.ai publication on LLM evaluation, please visit the Mozilla.ai blog) In a year marked by extraordinary advancements in artificial intelligence, largely driven by the evolution of large language models (LLMs), one factor stands out as a universal accelerator: the exponential growth in computational power. Over the last few years, researchers have continuously pushed the boundaries of what a ‘large’ language model means, increasing both the size of these models and the volume of data they were trained on. This exploration has revealed a consistent trend: as the models have grown, so have their capabilities. Fast forward to today, and the landscape has radically transformed. Training state-of-the-art LLMs like ChatGPT has become an immensely costly endeavor. The bulk of this expense stems from the staggering amount of computational resources required. To train an LLM, researchers process enormous datasets using the latest, most advanced GPUs (graphics processing units). The cost of acquiring just one of these GPUs, such as the H100, can reach upwards of $30,000. Moreover, these units are power-hungry, contributing to significant electricity usage. While there have been substantial efforts by researchers and organizations towards openness in the development of large language models, in addition to the compute challenges, three major hurdles have also hampered efforts to level the playing field:

The high compute requirements, coupled with the opaqueness and complexity of developing and evaluating LLMs, are hampering progress for researchers and smaller organizations striving for openness in the field. This not only threatens to reduce the diversity of innovation but also risks centralizing control of powerful models in the hands of a few large entities. The critical question arises: are these entities prepared to shoulder the ethical and moral responsibilities this control entails? Moreover, what steps can we take to bridge the divide between open innovators and those who hold the keys to the leading LLM technology?

Why LLMs make model evaluation harder than ever

Evaluating LLMs means assessing their performance and capabilities across various tasks and benchmarks. It provides a measure of progress and highlights areas where models excel or need improvement. ‘Traditional’ machine learning evaluation is quite straightforward: if we develop a model to predict lung cancer from X-ray images, we can test its accuracy using a collection of X-rays that doctors have already diagnosed as either having cancer (YES) or not (NO). By comparing the model’s predictions with the doctor-diagnosed cases, we can assess how well it matches the expert classifications. In contrast, LLMs can complete an almost endless number of tasks: summarization, autocompletion, reasoning, generating recommendations for movies and recipes, writing essays, telling stories, generating good code, and so on. Many of these tasks have no single correct answer to check against, so evaluating performance becomes much, much harder. At Mozilla.ai, we believe that making open-source model fine-tuning and evaluation as accessible as possible is an important step toward helping people own and trust the technology they use. Currently, this still requires expertise and the ability to navigate a rapidly evolving ecosystem of techniques, frameworks, and infrastructure requirements.
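The ‘traditional’ X-ray evaluation described above boils down to counting matches between predictions and expert labels. The following is a minimal Python sketch of that idea, assuming binary YES/NO labels. The function name `evaluate`, the five-image example data, and the choice to report sensitivity and specificity alongside accuracy are illustrative assumptions. They are not Mozilla.ai’s tooling.

```python
# Minimal sketch of "traditional" evaluation: compare a classifier's YES/NO
# predictions with labels that doctors have already assigned.
# All names and data here are hypothetical illustrations.
from typing import Dict, List


def evaluate(predictions: List[str], labels: List[str]) -> Dict[str, float]:
    """Score YES/NO predictions against expert (doctor-diagnosed) labels."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("need two non-empty lists of equal length")
    pairs = list(zip(predictions, labels))
    tp = sum(p == "YES" and y == "YES" for p, y in pairs)  # cancer, flagged
    tn = sum(p == "NO" and y == "NO" for p, y in pairs)    # healthy, cleared
    fp = sum(p == "YES" and y == "NO" for p, y in pairs)   # false alarm
    fn = sum(p == "NO" and y == "YES" for p, y in pairs)   # missed case
    return {
        # Share of all X-rays where the model agrees with the doctors.
        "accuracy": (tp + tn) / len(labels),
        # Share of diagnosed cancers the model flagged.
        "sensitivity": tp / (tp + fn) if tp + fn else 0.0,
        # Share of healthy X-rays the model correctly cleared.
        "specificity": tn / (tn + fp) if tn + fp else 0.0,
    }


# Hypothetical example data: five X-rays.
doctor_labels = ["YES", "NO", "NO", "YES", "NO"]
model_predictions = ["YES", "NO", "YES", "NO", "NO"]
result = evaluate(model_predictions, doctor_labels)
print(result)
```

Every X-ray here has one agreed answer, so the score is a simple count. An LLM-written summary or essay usually has no single reference label to compare against, which is why this approach does not carry over directly.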
We want to make model fine-tuning and evaluation less overwhelming for organizations and developers, which is why we’re building tools that:

We think there will be a significant opportunity for many organizations to use these tools to develop their own small and specialized language models that address a range of meaningful use cases in a cost-effective way. We strive to empower developers and organizations to engage with and trust the open-source ecosystem and minimize their dependence on using closed-source models over which they have no ownership or control. Read the whole publication and subscribe to future ones in the Mozilla.ai blog. The post The cost of cutting-edge: Scaling compute and limiting access appeared first on The Mozilla Blog.

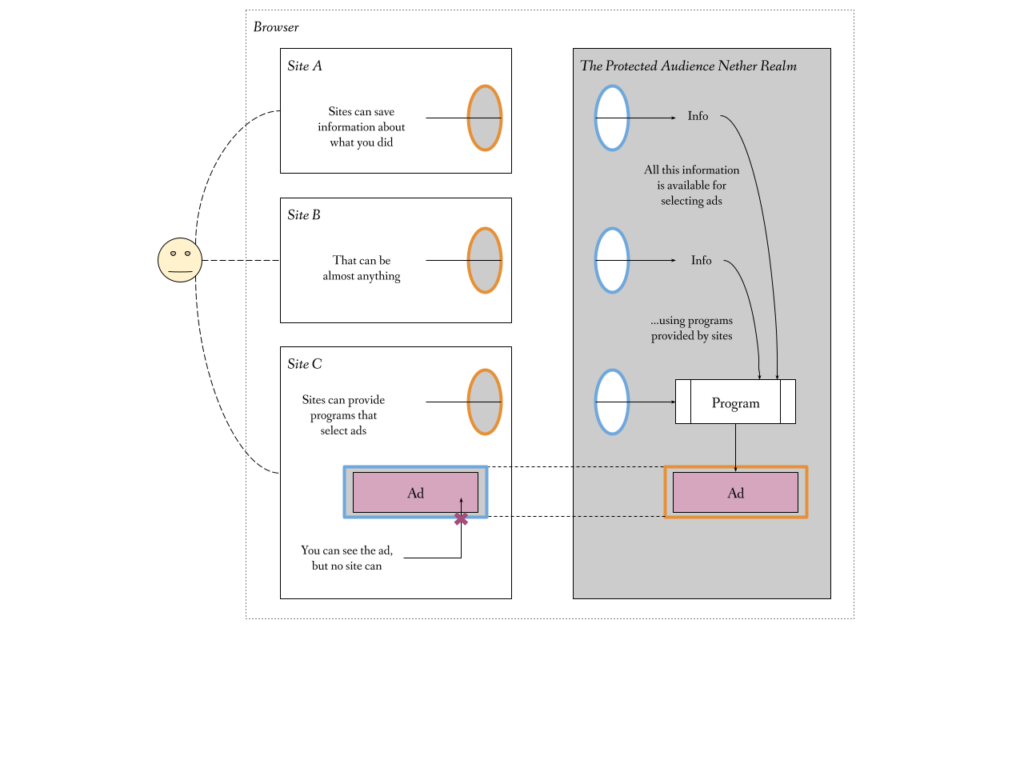

The announcement that Google would remove the ability to track people using third-party cookies in its Chrome browser was met with some consternation from advertisers. After all, when your business relies as heavily on tracking as is common in the advertising industry, removing the key means of performing that tracking is a bit of a big deal. Google relies on tracking too, but this change has the potential to skew the advertising market in Google’s favor. For online advertisers looking to perform individualized ad targeting, tracking is a significant source of visitor information. Smaller ad networks and websites depend on being able to source information about what people do on other sites in order to be competitive in the ruthless online advertising marketplace. Without that, these smaller players might be less able to connect visitors with the often shady trade in personal data. On the other hand, entities like Google that operate large sites might rely less on information from other sites. Losing the information that comes from tracking people might affect them far less when they can use information they gather from their many services. So, while the privacy gains are clear (reducing tracking means less collection and trade of information about what people do online), the competition situation is awkward. Here we have a company that dominates both the advertising and browser markets proposing a change that comes with clear privacy benefits but will also further entrench its own dominance in the massively profitable online advertising market. Protected Audience is a cornerstone of Google’s response to pressure from competition regulators, in particular the UK Competition and Markets Authority, with which Google entered into a voluntary agreement in 2022. Protected Audience seeks to provide some counterbalance to the effects of better privacy in the advertising market.
We find Google’s claims about the effect of Protected Audience on advertising competition credible. The proposal could make targeted advertising better for sites that heavily relied on tracking in the past. That comes with a small caveat: complexity might cause a larger share of advertising profits to go to ad tech intermediaries. However, the proposal fails to meet its own privacy goals. The technical privacy measures in Protected Audience fail to prevent sites from abusing the API to learn about what you did on other sites. To say that the details are a bit complicated would be something of an understatement. Protected Audience is big and involved, with lots of moving parts, but it can be explained with a simple analogy. The idea behind Protected Audience is that it creates something like an alternative information dimension inside your (Chrome) browser. In this alternative dimension, tracking what you saw and did online is possible. Any website can push information into that dimension. While we normally avoid mixing data from multiple sites, those rules are changed to allow that. Sites can then process that data in order to select advertisements. However, no one except you can see into this dimension. Sites can open a window for you to peek into that dimension, but only to see the ads they chose. Leaving the details aside for the moment, the idea that personal data might be made available for specific uses like this is quite appealing. A few years ago, something like Protected Audience might have been the stuff of science fiction. Protected Audience might be flawed, but it demonstrates real potential. If this is possible, it might give people more of a say in how their data is used. Rather than just having someone spy on your every action and then use that information as they like, you might be able to specify what they can and cannot do. The technology could guarantee that your choice is respected.
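The “alternative dimension” analogy can be made concrete with a toy Python model. Everything in it is hypothetical. `BrowserSandbox`, `OpaqueAd`, `record_interest`, `select_ad`, `show_to_user` and `leaky_select_ad` are illustrative names and not the real Protected Audience API. The model also skips auctions, bidding scripts and most other moving parts. It shows three things: sites pushing data into a browser-held store, data from several sites being mixed to pick an ad, and the site getting back only an opaque handle that the user can look through. The deliberately leaky variant shows, in the simplest form, how handing a site more than that lets it learn what you did elsewhere.

```python
# Toy model of the "alternative dimension" analogy. Hypothetical names only;
# this is NOT the Protected Audience API and omits auctions, bidding, etc.
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


class OpaqueAd:
    """What a site receives: something it can display but is not meant to read.

    Python cannot truly hide attributes; the leading underscore only signals
    that site code should not look inside.
    """

    def __init__(self, creative: str) -> None:
        self._creative = creative

    def __repr__(self) -> str:
        return "<opaque ad>"


@dataclass
class BrowserSandbox:
    # Interests recorded per site; conceptually this never leaves the browser.
    _interests: Dict[str, Set[str]] = field(default_factory=dict)

    def record_interest(self, site: str, interest: str) -> None:
        """Any site may push information into the sandbox."""
        self._interests.setdefault(site, set()).add(interest)

    def _pick(self, ads: Dict[str, str]) -> Optional[str]:
        # Unlike normal cross-site rules, data from every site is mixed here.
        everything = set().union(*self._interests.values())
        # Arbitrary rule for the toy: first matching interest alphabetically.
        for interest, creative in sorted(ads.items()):
            if interest in everything:
                return creative
        return None

    def select_ad(self, ads: Dict[str, str]) -> Optional[OpaqueAd]:
        """Choose an ad, but give the calling site only an opaque handle."""
        creative = self._pick(ads)
        return OpaqueAd(creative) if creative is not None else None

    def leaky_select_ad(self, ads: Dict[str, str]) -> Optional[str]:
        """Deliberately leaky variant: returning the creative itself lets the
        calling site infer what the user did on other sites."""
        return self._pick(ads)

    @staticmethod
    def show_to_user(ad: OpaqueAd) -> str:
        """Only the user's view opens the window onto the chosen ad."""
        return ad._creative


sandbox = BrowserSandbox()
sandbox.record_interest("shoes.example", "running")
sandbox.record_interest("travel.example", "hiking")

ads = {"hiking": "Ad: trail boots", "cooking": "Ad: cast-iron pan"}
handle = sandbox.select_ad(ads)
assert handle is not None
print(handle)                               # what the embedding site sees
print(BrowserSandbox.show_to_user(handle))  # what the user sees
print(sandbox.leaky_select_ad(ads))         # the leak: site learns of "hiking"
```

In this toy, privacy depends entirely on the site getting nothing back except the opaque handle. Any extra channel, such as `leaky_select_ad` returning the creative, exposes interests that other sites recorded.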
Maybe advertising is not the first thing you would do with the power to control how your data is used, but if the advertising industry is willing to fund investments in new technology that others could eventually use, that could be a good thing. Sadly, Protected Audience fails in two ways. To be successful, it must process data without leaks. In a complex design like this, it is almost expected that there will be a few holes. However, the biggest problem is that the browser needs to stop websites from seeing the information that they process. Preventing advertising companies from looking at the information they process makes Protected Audience extremely difficult to use. In response to concerns from these companies, Google loosened privacy protections in a number of places to make it easier to use. Of course, with those protections weakened, the current proposal provides no privacy. In other words, to make Protected Audience easier to use, Google made the design even leakier. A lot of these leaks are temporary. Google has a plan, and even a timeline, for closing most of the holes that were added to make Protected Audience easier for advertisers to use. The problem is that there is no credible fix for some of the information leaks embedded in Protected Audience’s architecture. A stronger Protected Audience might lead us to ask some fairly challenging questions. Do objections to targeted advertising arise solely from the privacy problems with current technology? Or is targeted manipulation itself the problem? Targeted advertising is more effective because it uses more information about its audience (you) to better influence your decisions. So would a system that prevented information collection, but still allowed advertisers to exercise that influence, be acceptable? We would also need to decide to what extent a browser, as a user agent, can justifiably act in ways that are not directly in the interests of its user.
Protected Audience exists for the benefit of the advertising industry. A system that makes it easier to build websites supported by advertising has benefits. After all, advertising does have the potential to make content more widely accessible to people of different means, with richer people effectively subsidizing content for those of lesser means. A stronger Protected Audience might then provide people with a real, if indirect, benefit. Does that benefit outweigh the costs of giving advertisers greater influence over our decision-making? With Protected Audience as it is today, we can simply set those questions aside. In failing to achieve its own privacy goals, Protected Audience is not now, and maybe never will be, a good addition to the Web. Read our much longer analysis of Protected Audience for more details. The post Google’s Protected Audience Protects Advertisers (and Google) More Than It Protects You appeared first on The Mozilla Blog.